Workers - Modular Chat Interface & Agent Runtime

This project provides a modular chat and agent execution framework, supporting various models and agent types. Explore the code in the GitHub repository or try the current demo at sophia.sh (email: [email protected], password: jane).

Goals

The objectives of the first iteration of this project were to:

- build a chat system supporting multiple models and agents with streaming and proper chat history management.

- create a modular agent server architecture that is easily extensible and independent of framework, language or runtime.

Chat Interface

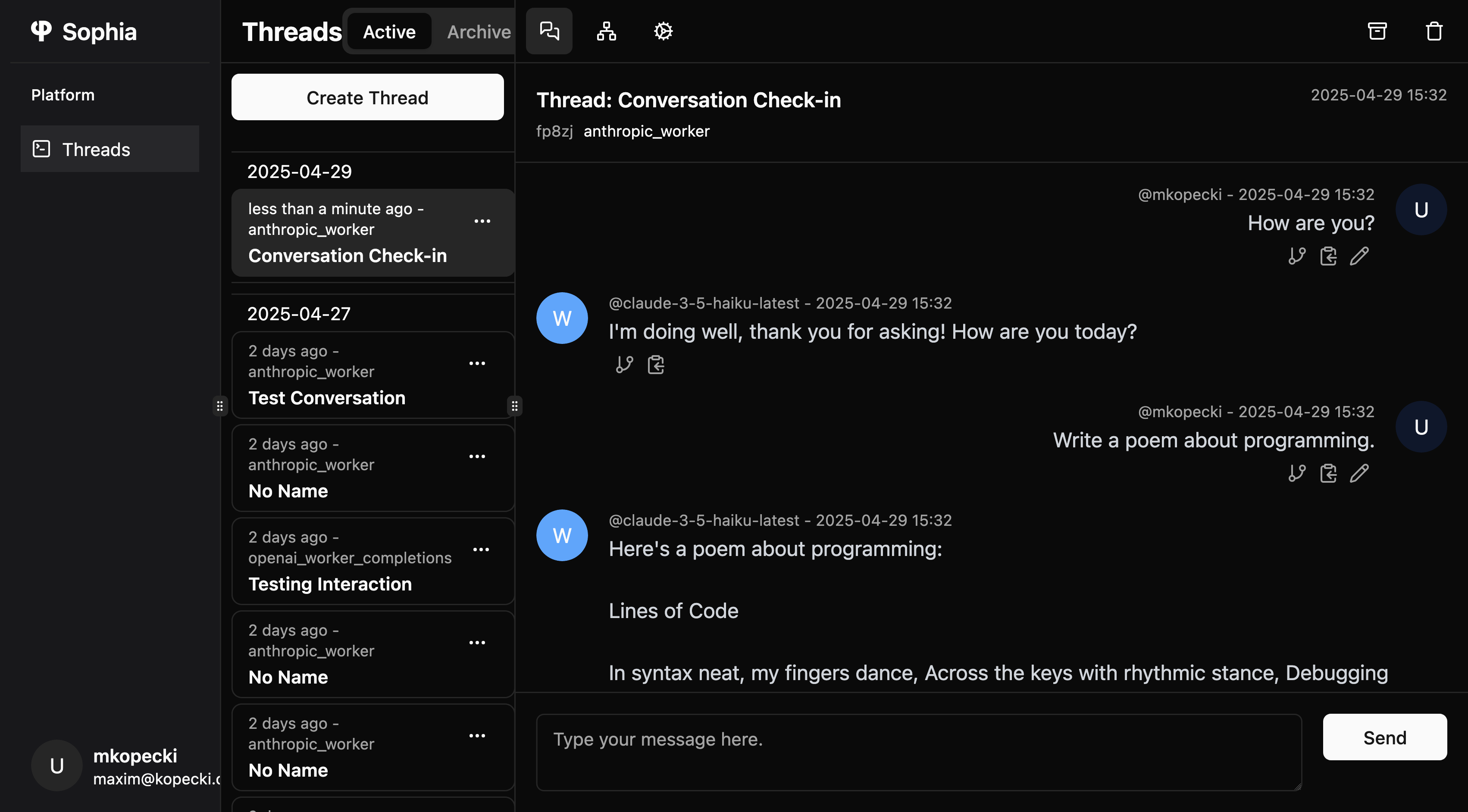

The chat interface features a basic thread-based chat system that supports streaming through Server-Sent Events (SSE).
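To make the streaming side concrete, here is a minimal sketch of how a client could parse an SSE stream into events. The parsing follows the standard SSE wire format (events separated by blank lines, `field: value` lines, comment lines starting with `:`). The event name `message_delta` and the JSON payload shape are assumptions made for illustration, not the project's actual protocol.

```python
import json
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one dict per SSE event from an iterable of text lines."""
    event, data = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line terminates the current event.
            if data:
                yield {"event": event, "data": "\n".join(data)}
            event, data = "message", []
        elif line.startswith(":"):
            continue  # comment / keep-alive line
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # strip the single optional leading space
            if field == "event":
                event = value
            elif field == "data":
                data.append(value)


# Hypothetical stream: event name and payload shape are assumptions.
stream = [
    "event: message_delta\n",
    'data: {"text": "Hel"}\n',
    "\n",
    ": keep-alive\n",
    "event: message_delta\n",
    'data: {"text": "lo"}\n',
    "\n",
]
events = list(parse_sse(stream))
text = "".join(json.loads(e["data"])["text"] for e in events)
print(text)
```

In a real client the lines would come from an open HTTP response rather than a list, and each delta would be appended to the message being rendered.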

Thread State & Versioning

Unlike traditional interfaces such as ChatGPT, the design principle here is that editing a message should never erase historical context.

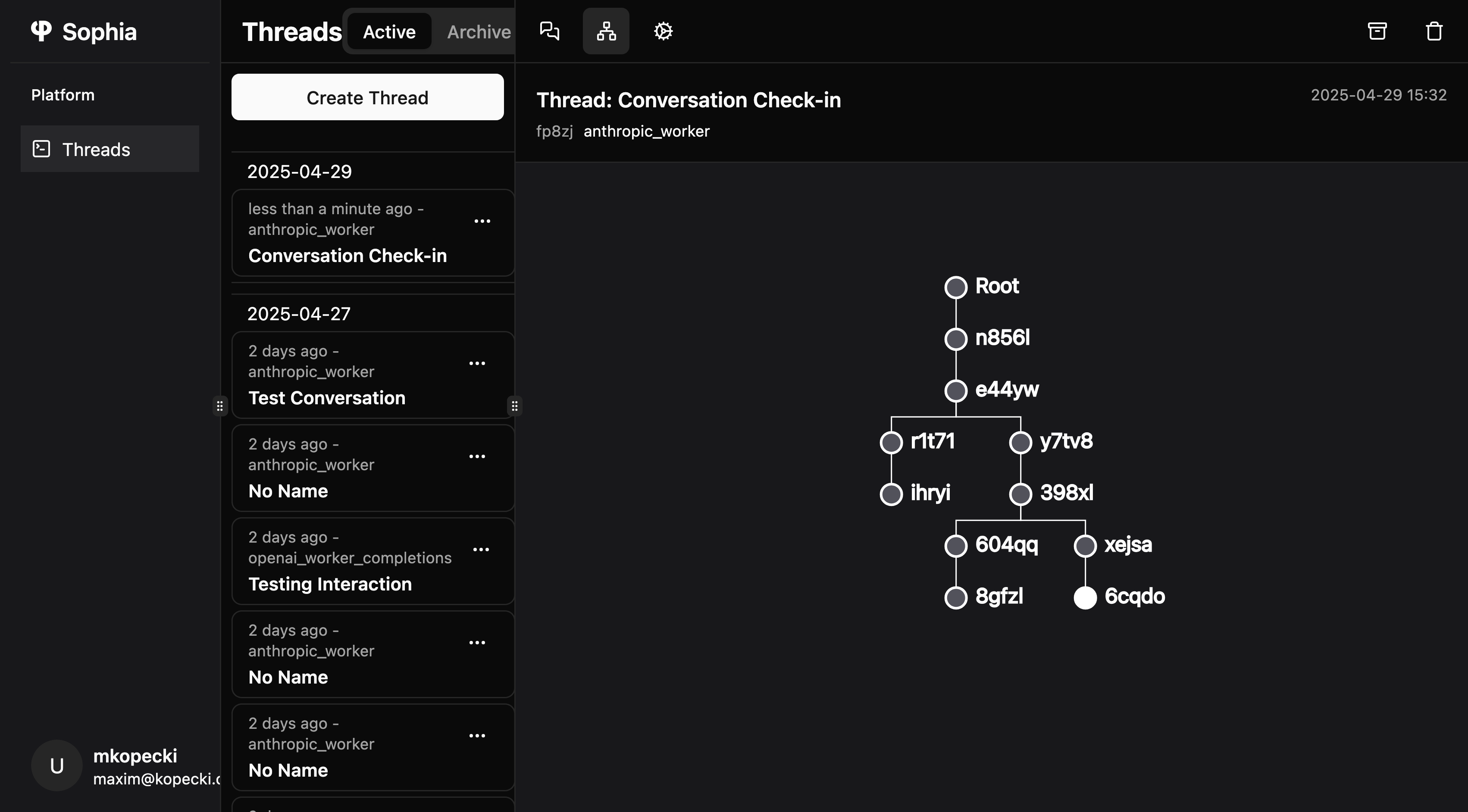

Internally we represent each thread as a tree of thread states:

- Thread Chain: thread state = parent state + new messages.

- Editing: editing a past message creates a new branch, leaving existing messages intact.

- Visualization: The UI visually represents this tree structure, allowing users to navigate to any state and resume the conversation from this point.
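The three points above can be sketched as a small immutable data structure. This is an illustration under assumptions: the class name `ThreadState`, its fields, and the use of plain strings for messages are hypothetical and not taken from the project's code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ThreadState:
    """One node in the thread tree (hypothetical shape)."""
    id: str
    parent: Optional["ThreadState"]
    new_messages: tuple[str, ...] = ()

    def messages(self) -> list[str]:
        """Full history = parent's history + this state's new messages."""
        inherited = self.parent.messages() if self.parent else []
        return inherited + list(self.new_messages)


root = ThreadState("s0", None, ("user: Hi", "assistant: Hello!"))
s1 = ThreadState("s1", root, ("user: Tell me a joke", "assistant: (a joke)"))

# Editing "Tell me a joke" does not mutate s1. It creates a sibling state
# that branches off the state before the edited message.
s2 = ThreadState("s2", root, ("user: Tell me a fact",))

print(s1.messages())
print(s2.messages())
```

Because states are never modified, both branches stay reachable, and resuming from any state is just a matter of creating a new child of it.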

Agent Runtime

Core Idea

The implementation of our chat client and our execution layer shouldn't be tightly coupled to models or frameworks. The server therefore exposes two small, composable interfaces:

- Thread API: everything the chat frontend needs (auth, messages, SSE updates)

- Worker API: a thin execution layer for LLMs and agents

Adding a Worker

To integrate a new worker, we add a folder containing three files: a worker.toml configuration file, the code that runs the worker, and a JSON schema describing the configuration options the worker accepts.

Folder Structure:

    workers/
    └─ anthropic_worker/
       ├─ worker.toml
       ├─ config_schema.json
       └─ main.py

worker.toml:

    id = "anthropic_worker"
    name = "Anthropic Worker"
    type = "python"
    entrypoint_path = "main.py"
    config_schema_path = "config_schema.json"

config_schema.json:

    {
      "$schema": "http://json-schema.org/draft-04/schema#",
      "type": "object",
      "properties": {
        "model": {
          "enum": ["claude-3-5-haiku-latest"],
          "type": "string"
        }
      },
      "required": ["model"]
    }
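As an illustration of what this schema enforces, the sketch below checks a run configuration against it. It is a deliberately small, hand-written check that only understands the keywords used in this schema (`required`, `type: string`, `enum`). The function name `validate_config` is hypothetical, and the project may well rely on a full JSON Schema validator instead.

```python
import json

SCHEMA = json.loads("""
{
  "$schema": "http://json-schema.org/draft-04/schema#",
  "type": "object",
  "properties": {
    "model": {"enum": ["claude-3-5-haiku-latest"], "type": "string"}
  },
  "required": ["model"]
}
""")


def validate_config(config, schema) -> list[str]:
    """Return a list of human-readable errors (empty if the config is valid)."""
    if not isinstance(config, dict):
        return ["config must be an object"]
    errors = []
    for key in schema.get("required", []):
        if key not in config:
            errors.append(f"missing required property: {key}")
    for key, rules in schema.get("properties", {}).items():
        if key not in config:
            continue
        value = config[key]
        if rules.get("type") == "string" and not isinstance(value, str):
            errors.append(f"{key}: expected a string")
        if "enum" in rules and value not in rules["enum"]:
            errors.append(f"{key}: must be one of {rules['enum']}")
    return errors


print(validate_config({"model": "claude-3-5-haiku-latest"}, SCHEMA))
print(validate_config({}, SCHEMA))
```

Exposing the schema to the client also lets the frontend render a configuration form (here, a single model dropdown) without knowing anything about the worker itself.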

On startup, the server discovers all folders inside workers/, loads each worker.toml and exposes the available workers and their configuration schemas to the client. When a client creates a new run, the server executes the worker's entrypoint in a child process and passes it a run_id. The worker script can then use the server's API endpoints to retrieve its configuration, arguments and threads, and to stream new messages.